Mobile devices, such as smartphones, have increased their information processing capacity, and sensors have been integrated into their hardware. Such sensors capture information from the environment in which the devices operate. As a result, mobile applications that use environment and user information to provide services or perform context-based actions are increasingly common. This type of application is known as a context-aware application. While software testing is an expensive activity in general, testing context-aware applications is even more expensive and challenging. Thus, efforts are needed to automate testing for context-aware applications, particularly in the scope of Android, which is currently the operating system most used by smartphones.

This paper aims to identify and discuss the state-of-the-art tools that allow the automation of testing Android context-aware applications.

To do so, we carried out a systematic mapping study (SMS) to find the studies in the existing literature that describe or present Android testing tools. The discovered tools were then analyzed to identify their potential for testing Android context-aware applications.

A total of 68 works and 80 tools were obtained as a result of the SMS. Of the identified tools, five are context-aware Android application testing tools, and five are general Android application testing tools that also support testing of context-aware features.

Although context-aware application testing tools do exist, they do not support automatic generation or execution of test cases focusing on high-level contexts. Moreover, they do not support asynchronous context variations.

Mobile applications have become more than mere entertainment in our lives. They have become so pervasive that people are quite dependent on mobile devices and their applications. According to research conducted by the Statistics web site portal [1], the number of mobile users could reach the five billion mark by 2019.

While mobile applications were initially developed primarily for the entertainment industry, they now reach more critical sectors such as payment systems. The exponential growth of this market and the criticality of the systems being developed demand greater attention to the reliability of applications for these mobile devices. As demonstrated in some studies [2], [3], [4], mobile applications are not bug-free, and new software engineering approaches are required to test these applications [5].

Software testing is a commonly applied activity to assess whether software behaves as expected. However, testing mobile applications can be challenging. According to Muccini et al. [4], mobile applications have a few peculiarities that make testing more difficult when compared to other kinds of computer software. Some of these peculiarities are connectivity, limited resources, autonomy, user interface, context-awareness, new operating system updates, diversity of phones and operating systems, and touch screens.

Therefore, as testing difficulty increases, so does the demand for tools to automate the testing process of mobile applications. Currently, most researchers’ and practitioners’ efforts in this area target the Android platform, for multiple reasons [6]: (a) at the moment, Android has the largest share of the mobile market (approximately 76% of the worldwide mobile operating system market share from June 2018 until June 2019, according to the Statcounter web site [7]), which makes Android extremely appealing for industry practitioners; (b) as Android is installed on a range of different devices and has different releases, Android apps often suffer from cross-platform and cross-version incompatibilities, which makes manual testing of these apps particularly expensive and thus particularly worth automating; and (c) Android is an open-source platform, which makes it a more suitable target for academic researchers, since it allows complete access to both the apps and the underlying operating system.

Nowadays, mobile devices are equipped with several sensors such as touch screen, compass, gyroscope, GPS, accelerometer, pedometer, and so on. These sensors make the development of context-aware applications possible. This paper considers context as any information that may characterize the situation of an entity. An entity can be defined as a person, a place, or an object that is relevant when considering the interaction between a user and an application [8]. A system is context-aware if it considers context information to perform its task of providing relevant information or services to a user [9]. Therefore, a context-aware application uses the information provided by the sensors to deliver relevant information or to direct its behavior.
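To make the definition above concrete, the following minimal sketch shows an application decision that is driven by context rather than by explicit user input. The `Context` fields, the thresholds, and the mode names are hypothetical and serve only as an illustration; they are not taken from any of the cited works.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Context:
    """Hypothetical snapshot of sensor-derived context information."""
    ambient_light_lux: float  # e.g., read from a light sensor
    speed_m_per_s: float      # e.g., derived from consecutive GPS fixes


def choose_ui_mode(ctx: Context) -> str:
    """Direct the application's behavior from context information."""
    if ctx.speed_m_per_s > 5.0:       # assumed threshold: user is likely in a vehicle
        return "driving"
    if ctx.ambient_light_lux < 50.0:  # assumed threshold: dark environment
        return "night"
    return "day"
```

A tester of such an application has to control the sensor values, not only the GUI, in order to reach each of the three branches.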

This paper intends to identify tools capable of testing context-aware applications. Therefore, a systematic mapping study (SMS for short) was carried out in order to answer the following main research questions: (a) what are the Android testing tools published in the literature? and (b) what are the Android context-aware testing studies and tools published in the literature? From the answers to these research questions, we were able to analyze whether the existing tools can test context-aware applications.

The SMS resulted in a total of 68 works. From them, we could see which research groups work on Android application testing and which are more directly related to context awareness. Moreover, we identified 80 general Android testing tools. We analyzed these tools and identified which techniques are most used, which methods are implemented, which tools are available for download, and which tools are most used. Among the 80 general Android testing tools, we identified five tools for testing Android context-aware applications and five tools for testing general Android applications that also allow the testing of context-aware features. We noticed that these tools are not able to automatically generate or execute test cases that use high-level context, nor do they support asynchronous context variation.

The remainder of this paper is organized as follows: the “Background and related work” section presents the main concepts needed to understand this paper and the related work. The “Research method” section describes how the SMS was conducted. The “Results” section and the “Analysis and discussion” section present the results of the SMS and discuss the tools found in the context-aware Android application testing field, respectively. Finally, the “Conclusions and future works” section concludes the paper.

This section presents the main concepts related to this work. It also presents related work addressing problems and solutions relevant to the objective of this paper.

Android is Google’s mobile operating system and is currently the most widely used mobile operating system in the world. It is available for several platforms such as smartphones, tablets, TVs (Google TV), watches (Android Wear), glasses (Google Glass), and cars (Android Auto).

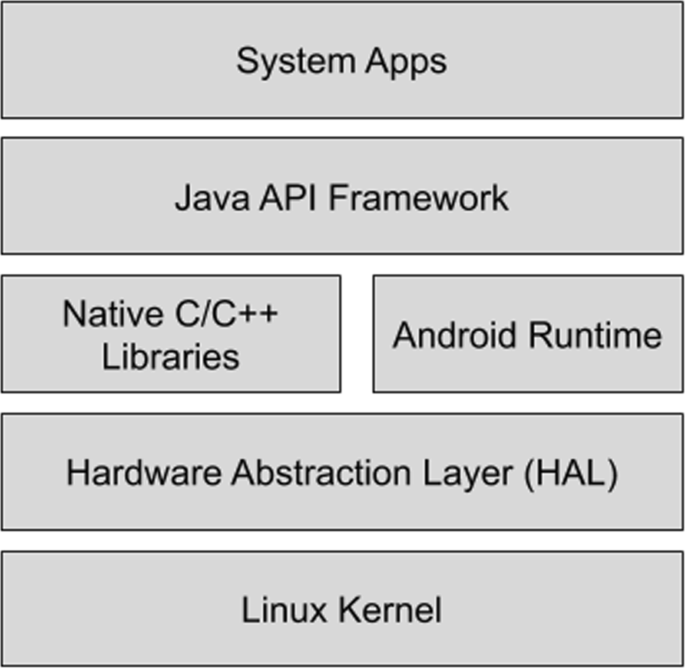

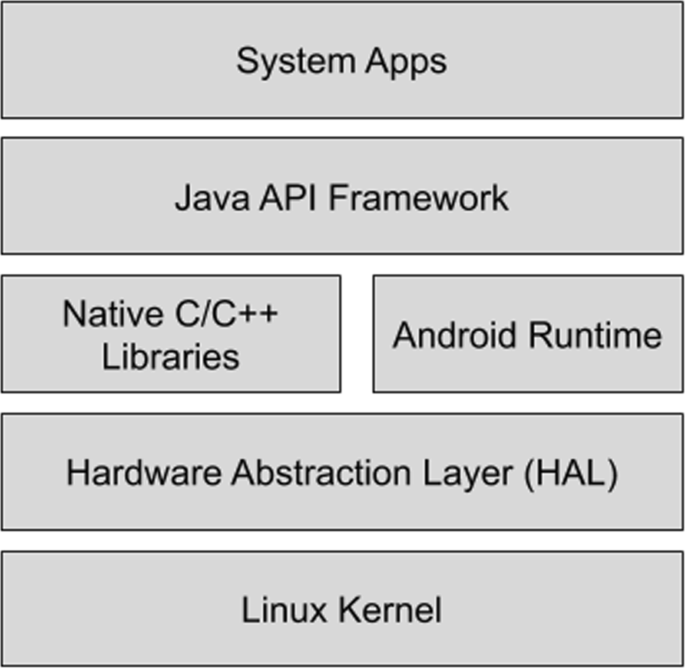

Although Android applications are developed using the Java language, there is no Java virtual machine (JVM) in the Android operating system. Up to Android 4.4 (KitKat), applications ran on a virtual machine called Dalvik, which is optimized to run on mobile devices. Dalvik was later replaced by ART (Android Runtime), which was introduced as an option in Android 4.4 and became the default runtime in Android 5.0. In both cases, once the Java source is compiled to bytecode (.class), the bytecode is converted to the .dex (Dalvik Executable) format, which represents the compiled Android application. After that, the .dex files and other resources such as images are compressed into a single .apk (Android Package File) file, which represents the final application. Android applications run on top of the Android framework, as can be seen in Fig. 1.

Android has a set of core apps for calendars, email, contacts, text messaging, internet browsing, and so on. Included apps have the same status as installed apps, so any app can become a default app [10].

All features of the Android OS are available to developers through APIs written in the Java language. Android APIs allow developers to reuse central and modular system components and services, making it easier to develop Android applications. Furthermore, Android provides Java framework APIs to expose the functionality of native code that requires native libraries written in C and C++. For example, a developer can manipulate 2D and 3D graphics in an app through the Java OpenGL API of the Android framework, which gives access to OpenGL ES.

As noted above, under ART each app runs in its own process and with its own instance of the runtime. ART is designed to run multiple virtual machines on low-memory devices by executing DEX files, a bytecode format designed especially for Android that is optimized for a minimal memory footprint.

The hardware abstraction layer (HAL) provides interfaces that expose the hardware features of the device, such as the camera, GPS, or Bluetooth module, to the higher-level Java API framework. When a framework API makes a call to access the device hardware, the Android system loads the library module for that hardware component.

The Android platform is built on the Linux kernel. For example, the Android Runtime (ART) relies on the Linux kernel for underlying functionalities such as threading and low-level memory management. Another advantage of using the Linux kernel is that Android can reuse its key security features and that device manufacturers can develop hardware drivers for a well-known kernel.

Initially, Android applications were written only in Java and ran on top of the Android framework presented in the “Android operating system” section. Since Google I/O 2017 [11], Android applications can be written using either Java or Kotlin (footnote 1). An Android application can be composed of four component categories: (a) activity, (b) broadcast receiver, (c) content provider, and (d) service.

Building an Android app requires a mandatory XML manifest file, which provides information regarding the app’s life cycle management.

Although Android applications are GUI-based and mainly written in Java, they differ from Java GUI applications, particularly by the kinds of bugs they can present [2, 12]. Existing test input generation tools for Java [13–15] cannot be directly used to test Android apps, and custom tools must be adopted instead. For this reason, the academic community has made a lot of effort to research Android application testing tools and techniques. Several test input generation techniques and tools for Android applications have been proposed [16–19].

The first mobile applications had desktop application features adapted for mobile devices. Muccini et al. [4] differentiate mobile applications into two sets: (a) traditional applications that have been rewritten to run on a mobile device, which are called App4Mobile, and (b) mobile applications that use context information to generate context-based output, called MobileApps. Over time, mobile applications have become increasingly pervasive. Their behavior depends not only on user inputs but also on context information. Therefore, current applications are increasingly characterized as context-aware applications.

To better understand what a context-aware application is, it is first necessary to define what context is. Some authors consider the context to be the user’s environment, while others consider it to be the application’s environment. Some examples are as follows:

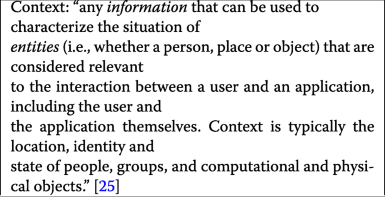

These definitions do not agree with each other and appear to be more author-specific definitions than a general definition of context. The definition most commonly accepted by several authors is the definition provided by Abowd et al.:

Besides being more general, this definition facilitates the understanding of what information can be considered as context information. The entities we identified as most relevant are places, people, and things. Places are regions of geographical space such as rooms, offices, buildings, or streets. People can be either individuals or groups, co-located or distributed. Things are either physical objects or software components and artifacts, for instance, an application or a file. The context information most commonly used by mobile applications is location, but there are several other types of context information that may be relevant to a mobile application such as temperature, brightness, time, date, mobile device tilt, geographic orientation (north, south, east, west), and so on.

Vaninha et al. [26] model context in four layers: low-level context, high-level context, situations, and scenarios. In their model, context information is divided into two levels (low-level and high-level). Combinations of values of context information form situations, and chronological sequences of situations form scenarios. Following the Vaninha et al. [26] model, we can divide context information into two levels:

Matalonga et al. [27] performed a quasi-systematic review aiming to characterize the methods used for testing context-aware software systems (CASS). Of the 1820 analyzed technical papers, 12 studies that address testing of CASS were identified. After analyzing the 12 studies, Matalonga et al. argue that they found no evidence of a complete context-aware test method for testing CASS.

Guinea et al. [28] conducted a systematic review evaluating the different phases of the software development life cycle for ubiquitous systems. The main limitations and interests were classified according to each phase of the development cycle. The systematic review resulted in 132 approaches addressing issues related to different phases of the software development cycle for ubiquitous systems. Of the 132 approaches, only 10 are related to the test phase. Guinea et al. classified the 10 approaches by their focus or concern and obtained 5 dedicated to context-aware testing, 3 to simulators, and 2 to test adequacy. For this reason, the authors state that testing is perhaps the phase where the results most clearly show that more research is needed.

Shauvik et al. [6] present a thorough comparison of the main existing test input generation tools for Android. The comparison evaluates the effectiveness of these tools, and of their corresponding techniques, according to four metrics: (a) code coverage, (b) ability to detect faults, (c) ability to work on multiple platforms, and (d) ease of use. Shauvik et al. point out that Android is event-driven. Consequently, inputs are normally in the form of events, such as clicks, scrolls, and text inputs, or system events, such as the notification of newly received text messages. Thus, testing tools can generate such inputs either randomly or by following a systematic exploration strategy. In the latter case, exploration can either be guided by a model of the app, whose construction can be done statically or dynamically, or exploit techniques that aim to achieve as much code coverage as possible. Besides that, testing tools can generate events by considering Android apps as either a black box or a white box. Gray box approaches are also possible. The gray box strategy can be used to extract high-level properties of the app, such as the list of activities and the list of UI elements contained in each activity, in order to generate events that will likely expose unexplored behavior. While Shauvik et al. present unexpected results and also an analysis of weaknesses and limitations of the tools, neither the tools nor the analyses and conclusions of that work take context-aware applications into account.
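As an illustration of the random input generation strategy mentioned above, the following minimal sketch produces a reproducible sequence of UI and system events. The event kinds, the tuple layout, and the function name are hypothetical; the sketch does not reproduce the behavior of any specific tool evaluated in [6].

```python
import random

# Illustrative event kinds: three UI events and one system event.
EVENT_KINDS = ("click", "scroll", "text_input", "sms_received")
ALPHABET = "abc123"


def random_events(n, width, height, seed=0):
    """Generate n pseudo-random events for a screen of width x height pixels."""
    rng = random.Random(seed)  # a fixed seed keeps a failing run reproducible
    events = []
    for _ in range(n):
        kind = rng.choice(EVENT_KINDS)
        if kind in ("click", "scroll"):
            # UI events carry screen coordinates.
            events.append((kind, rng.randrange(width), rng.randrange(height)))
        elif kind == "text_input":
            text = "".join(rng.choice(ALPHABET) for _ in range(4))
            events.append((kind, text))
        else:
            # System events carry no payload in this sketch.
            events.append((kind,))
    return events
```

Seeding the generator is a design choice that matters in practice: without it, a crash found by random exploration cannot be replayed.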

Due to the growing number of context-aware applications in recent years, many other researchers have been interested in investigating the testing of such applications [16, 17, 29, 30].

Wang et al. [17] introduced an approach to improve the test suite of a context-aware application by identifying context-aware program points where context changes may affect the application’s behavior. Their approach systematically manipulates the context data fed into the application to increase its exposure to potentially valuable context variations. To do so, the technique performs the following tasks: (1) it identifies key program points where context information can effectively affect the application’s behavior, (2) it generates potential variants for each existing test case that explore the execution of different context sequences, and (3) it attempts to dynamically direct application execution towards the generated context sequences.
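Task (2) above can be pictured with the following minimal sketch, which enumerates variants of one test case by assigning a context value to each context-aware point. This is an exhaustive enumeration written only for illustration, under the assumption that context-aware points are known step indices; it is not the algorithm of Wang et al. [17], and all names and values are hypothetical.

```python
from itertools import product


def context_variants(test_steps, context_points, context_values):
    """Return one variant per assignment of a context value to each context-aware point.

    test_steps: ordered list of step names of an existing test case.
    context_points: indices of steps where a context change may affect behavior.
    context_values: candidate context values to inject at those points.
    """
    variants = []
    for combo in product(context_values, repeat=len(context_points)):
        schedule = dict(zip(context_points, combo))
        # Steps that are not context-aware points get no injected context (None).
        variants.append([(step, schedule.get(i)) for i, step in enumerate(test_steps)])
    return variants
```

With two context-aware points and two candidate values, the sketch yields four variants of the original test case; the number grows exponentially with the number of points, which is one reason a real technique needs to select variants rather than enumerate them all.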

Songhui et al. [16] investigate challenges and proposed resolutions for testing context-aware software from the perspective of four categories of challenges: context data, adequacy criteria, adaptation, and testing execution. They conclude there is no automatic testing framework that considers all of the discussed challenges, and they say they are building such a framework as an execution platform to ease the difficulty of testing context-aware software.

Through a quasi-systematic literature review, Santiago et al. [29] identified 11 relevant sources that mentioned 15 problems and 4 proposed solutions. The data were analyzed and classified into 3 groups of challenges and strategies for dealing with context-aware software systems testing.

Sanders and Walcott [30] propose an approach to test multiple states of Android devices based on existing test suites to address the testing of context-aware applications. They introduce the TADS (Testing Application to Device State) library. However, the approach currently handles only the Wi-Fi and Bluetooth sensors.

Holzmann et al. [31] and the AWARE framework (footnote 2) present very similar applications for sharing mobile context information. Holzmann et al. present an application called the “Automate toolkit” which, similarly to the AWARE framework, is able to store the usage data and context information of a device, so it is possible to store device usage scenarios such as visited screens and performed gestures. Thus, usage scenarios that cause failures in an application can be studied more carefully and repeated. While the “Automate toolkit” emphasizes the logging of interactions such as opened apps, visited pages, and number of interactions per page, AWARE focuses on presenting and explaining context information; that is, the main purpose of the AWARE framework is to collect data from a series of sensors on the mobile device and infer context information from them. Neither of these applications is intended to test context-aware applications, but rather to store usage scenarios.

Usman et al. [32] present a comparative study of mobile app testing approaches focusing on context events. The authors defined the following six key points as comparison criteria: event identification, method of analyzing mobile apps, testing technique, classification of context events, validation method, and evaluation metrics. The work of Usman et al. resembles ours in some results, such as the categorization of Android context-aware testing tools into testing approaches such as script-based testing, capture-replay, random, systematic exploration, and model-based testing. Their primary search was performed on the Scopus and Google Scholar databases and indexing systems and covered results between 2010 and 2017. In our work, a systematic mapping was performed covering results between 2008 and 2018. Therefore, we obtained a larger number of tools and studies. In addition, Usman et al. do not reflect further on the obtained results as we do in our work (i.e., main authors in the field of research, key conferences, and most used tools).

Although relevant, none of the studies discussed so far, except Usman et al. [32], has investigated the ability of current testing tools to handle Android context-aware applications. Matalonga et al. [27] presented a quasi-systematic review to characterize methods but did not discuss whether the current tools are capable of testing context-aware applications. Shauvik et al. [6] perform a thorough comparison of the main current test input generation tools for Android but do not analyze context awareness features. The other authors present studies of challenges and solutions, some with tools, but with their respective limitations.

The purpose of an SMS is to comprehensively identify, evaluate, and interpret all work relevant to the defined research questions. Thus, this section is based on the work of Petersen et al. [33] and details the central research questions of this paper, as well as the procedure followed to identify the relevant studies required to do so.

This systematic mapping aims at summarizing the current state of the art concerning test automation tools for Android context-aware applications. In order to do so, we conducted an SMS following the recommendations defined by Petersen et al. [33] and, therefore, proposed the following research questions (RQs):

For each main research question, we formulated sub-questions as listed above. Those sub-questions are answered to support our main questions. In RQ1, we aim to find out what are the existing Android testing tools currently discussed in the literature. In RQ2, we aim to identify and better understand the existing research about Android context-aware testing. To answer the research questions, we searched for studies from four digital libraries, as can be seen in the “Sources of information” section.

In order to gain a broad perspective, as recommended in Kitchenham’s guidelines [34], we widely searched for references in electronic sources. The following databases were covered:

These databases cover the most relevant journals, conferences, and workshop proceedings within Software Engineering.

In order to select only articles potentially related to testing tools for Android context-aware applications, some keywords were defined.

As a result of the combination between the keywords and the connectors AND and OR, the following search string was defined:

However, when executing the search string, the number of results was too small. Thus, we decided to split the search string into two search strings: in the first one, we would address studies related to context-aware Android applications; the second one, studies related to Android application testing tools.

Thus, the resulting search strings were the following.

Studies were selected for the SMS if they met the following inclusion criteria:

In terms of exclusion criteria, studies were excluded if they

To obtain more confidence in the research results, the study selection was divided into three steps: electronic search, selection, and extraction. The electronic search was conducted by executing the search strings in the sources of information. On all databases, the search strings were applied through the advanced command search feature provided by the online database, which was set to search the metadata of studies published from 2008 onward.

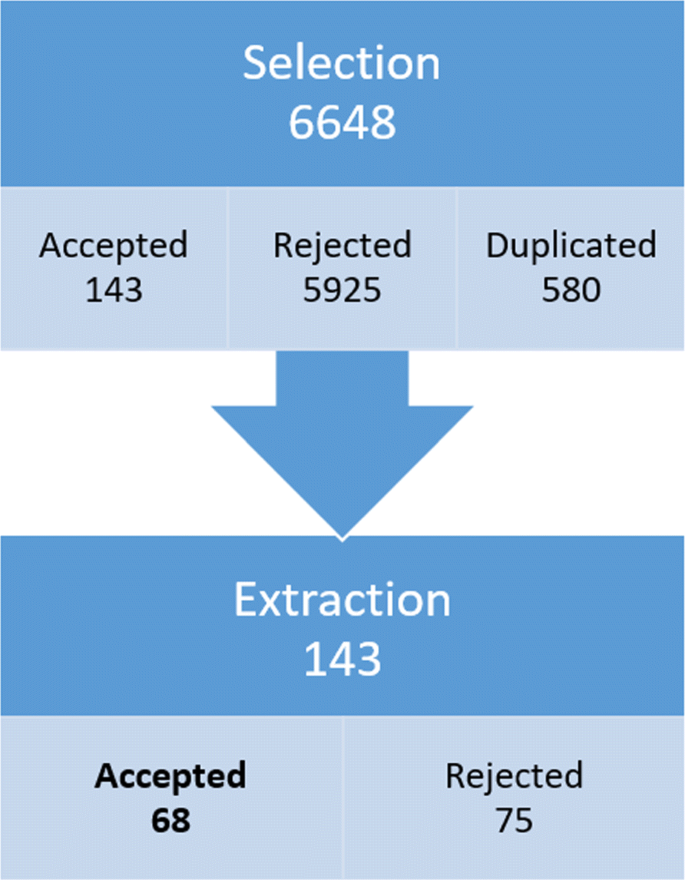

After executing the search strings in each of the sources of information, a total of 24,005 studies were found. The search returned too many results diverging from the objective of the research in two cases: Search String 2 in Science Direct and both search strings in Springer Link. Thus, some filters were applied to obtain results closer to the objective of the work. In Science Direct, the search was restricted to studies that presented the search string words in the title, abstract, or keywords. In Springer Link, the results were restricted to studies in the computer science discipline with software engineering as a subdiscipline. After applying the filters, 6648 studies remained, as can be seen in Table 1.

All information extracted from the 68 studies found is presented in the “Results” section. We analyzed the number of publications per year, the number of publications per country, the main conferences in which articles were published, and the main authors in the area. The main contribution of this information was to infer which groups publish the most in the area covered by this SMS.

The “Analysis and discussion” section presents the analysis performed on the 80 tools found in the systematic mapping conducted in this work. In this analysis, we discuss which testing techniques are used, which tools generate and/or execute test cases, the test case generation strategy, which sensor data each tool considers, the test approach, and whether the tool is available for download. This information allowed us to answer the SMS research questions.

There are validity threats associated with all phases during the execution of this SMS. Thus, this section discusses the threats and possible mitigation strategies according to each SMS phase.

We may have excluded studies during the search for various reasons, such as personal bias; this may negatively impact the SMS result. The following strategies were used to reduce risks.

Another threat is that we may have missed primary papers published before the year 2008. The Android operating system was publicly released in September 2008; since the research is directed to the Android operating system, we do not believe there are any publications in the research area before 2008.

Personal bias may decrease the quality of the data extracted from the studies (e.g., the extracted data may be incomplete). The strategy used to mitigate this threat was to hold weekly meetings in which we discussed the following:

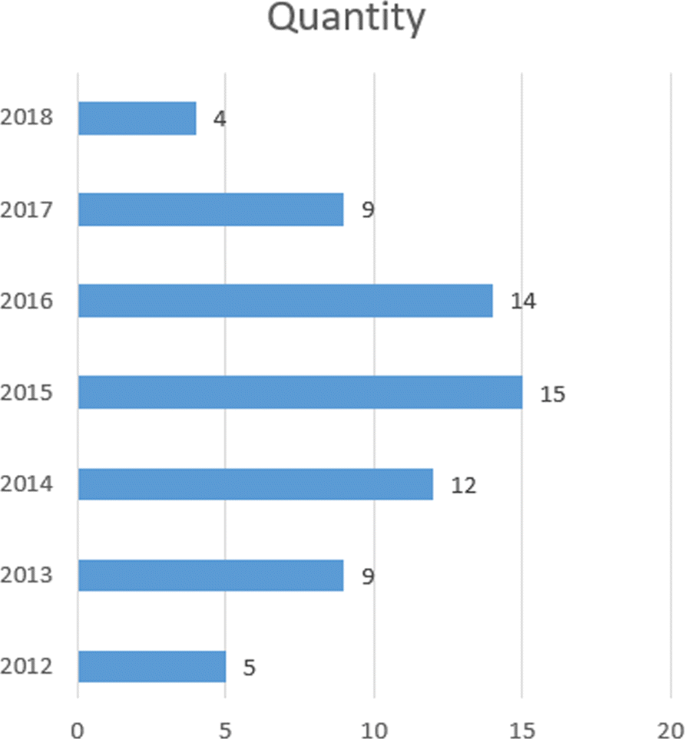

The systematic mapping resulted in the 68 studies presented in Table 3. We can see that they have been published since 2012 (Fig. 3). The largest number of studies (60.3% of the total) was published in 2014, 2015, and 2016. In 2017 and 2018, the number of publications decreased, which may suggest the beginning of declining interest in the area (Fig. 3). However, the systematic mapping focused only on studies on Android testing tools, and thus, we cannot say that the number of studies on Android testing has diminished.

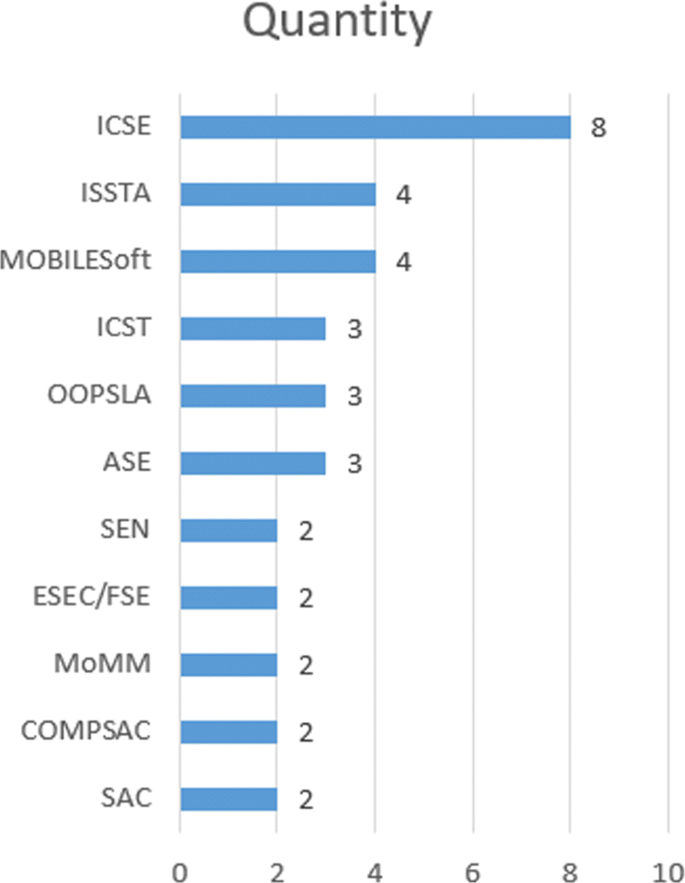

Figure 5 illustrates the major conferences in which the accepted studies were published. The International Conference on Software Engineering (ICSE) is the venue that published the most studies accepted in our SMS, 11.8% of all of them.

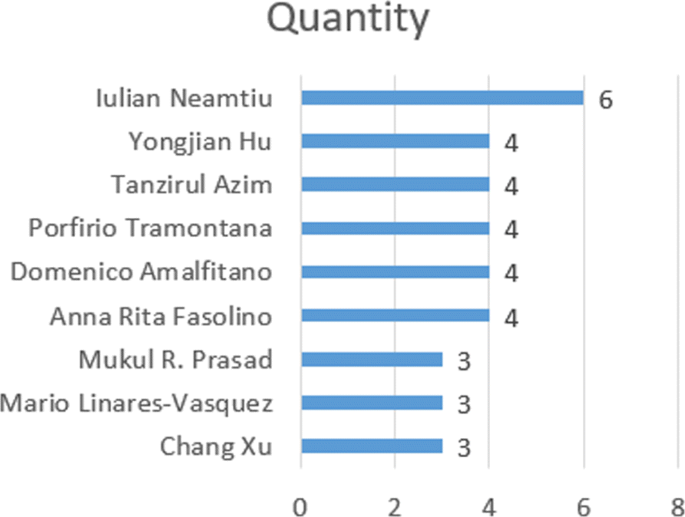

Figure 6 shows the authors who published the most articles in the research area of this systematic mapping. Analyzing the Google Scholar [102] profile of each of the authors, we can see that Iulian Neamtiu (footnote 9) published 21 articles on Android application testing, Anna Rita Fasolino (footnote 10) published 9, Domenico Amalfitano (footnote 11) published 12, Porfirio Tramontana (footnote 12) published 9, Tanzirul Azim (footnote 13) published 11, and Yongjian Hu (footnote 14) published 10. In addition, considering the studies accepted in this systematic mapping, we noticed that Iulian Neamtiu, Yongjian Hu, and Tanzirul Azim published 6 articles in which at least two of them were co-authors, and Domenico Amalfitano, Anna Rita Fasolino, and Porfirio Tramontana published 4 articles together. Thus, from the number of articles about Android application testing and the number of co-authored articles, we can identify two research groups that have a significant amount of published work in the scope of this study.

From the systematic mapping, we found 68 studies about Android testing tools. The “Results” section presented an overview of these studies. In this section, we answer and discuss the research questions elaborated during the planning of the systematic mapping.

From the 68 studies presented in the “Results” section, we identified 80 tools (Table 4). The tools were analyzed and classified with respect to the following:

For the sake of space, the table with the complete classification of the attributes is available online (see footnote 15).

Each tool tests Android applications through its implemented technique. Table 5 shows the main types of testing techniques of the identified tools. GUI testing tools are the most common ones. We found 32 tools that base their testing on identifying and exploring the interface elements of the applications. These represent 40% of the tools found. GUI testing tools use algorithms to identify interface elements such as text views, buttons, check boxes, and image views to generate and/or execute tests. Many tools use these graphical elements to construct state machines and thus determine the behavioral model of the application.

Table 5 Types of testing technique

Some Android applications should be prepared to react to events coming from the Android operating system such as low battery level, battery charging, incoming call, change of application in the foreground, airplane mode on/off, and so on. Moreover, the interaction between the user and many mobile applications does not occur exclusively through the interface elements. Many of the interactions can also occur through sensors like GPS, gyroscope, compass, and accelerometer. Thus, some tools test Android applications not by looking at the components of the interface, but rather through events generated for the application that simulate the user touching the screen, events from the system, and data from the sensors of the device. We call these tools system events testing tools because they interact with the application under test by stimulating events at the system level. We identified 17 system events testing tools, which represent 21% of the tools found.
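The idea of stimulating an application at the system level can be sketched as follows. `FakeMusicApp`, its handler, and the event names are hypothetical stand-ins for an application under test; the sketch assumes that a failure manifests as an unhandled exception.

```python
class FakeMusicApp:
    """Hypothetical application under test that reacts to system and sensor events."""

    def __init__(self):
        self.playing = True
        self.power_saving = False
        self.tilt = (0.0, 0.0, 0.0)

    def on_system_event(self, name, payload=None):
        if name == "incoming_call":
            self.playing = False          # pause playback during a call
        elif name == "battery_low":
            self.power_saving = True
        elif name == "sensor.accelerometer":
            x, y, z = payload             # seeded defect: no check for a missing reading
            self.tilt = (x, y, z)


def stimulate(app, events):
    """Feed (name, payload) events to the app and collect the ones that crash it."""
    failures = []
    for name, payload in events:
        try:
            app.on_system_event(name, payload)
        except Exception as exc:
            failures.append((name, type(exc).__name__))
    return failures
```

No interface element is touched in this sketch: the defect is exposed only because a sensor event with a missing reading is injected.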

In functional testing, as important as finding a usage scenario that fails is to be able to replicate it. Replicating a failing usage scenario allows us to identify whether the application defect has been fixed. With that in mind, record and replay testing tools were developed. These tools can record a user’s usage scenario and then run the same scenario as many times as the tester wishes. We identified 14 record and replay tools, representing 18% of the total found tools.
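A record and replay tool can be pictured with the following minimal sketch. `CounterApp`, `Recorder`, and `replay` are hypothetical names, and the application contains a seeded defect so that the recorded scenario fails; real tools record at the level of touch and system events rather than named actions.

```python
class CounterApp:
    """Hypothetical application under test with a seeded defect."""

    def __init__(self):
        self.items = 0

    def handle(self, event):
        if event == "add":
            self.items += 1
        elif event == "remove":
            self.items -= 1  # seeded defect: no guard against going below zero
        elif event == "checkout" and self.items < 0:
            raise ValueError("negative item count")
        return self.items


class Recorder:
    """Forward events to the app while recording them in order."""

    def __init__(self, app):
        self._app = app
        self.trace = []

    def send(self, event):
        self.trace.append(event)  # record first, so the failing event is kept
        return self._app.handle(event)


def replay(app_factory, trace):
    """Run a recorded trace on a fresh app instance and report the outcome."""
    app = app_factory()
    for index, event in enumerate(trace):
        try:
            app.handle(event)
        except Exception as exc:
            return ("failed", index, type(exc).__name__)
    return ("passed", len(trace), None)
```

Replaying on a fresh instance each time is what makes the scenario repeatable: after the defect is fixed, the same trace should report a pass.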

Many failures occur when buggy code is executed. Consequently, the higher the code coverage of a usage scenario, the greater the chance of finding bugs. For this reason, some tools test applications with the goal of maximizing the amount of code covered. Among the tools found in this systematic mapping, 6 are code coverage tools.
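One simple way to pursue high coverage is to pick, at each step, the usage scenario that covers the most code not yet exercised. The sketch below applies this greedy heuristic to hypothetical coverage data; it is an illustration of the goal described above, not the algorithm of any of the six tools.

```python
def greedy_select(scenarios, all_blocks):
    """Greedily choose scenarios that add the most uncovered code blocks.

    scenarios: dict mapping a scenario name to the set of blocks it covers.
    all_blocks: set of all blocks in the application.
    Returns the chosen scenario names and the coverage ratio they achieve.
    """
    covered = set()
    chosen = []
    remaining = dict(scenarios)
    while remaining:
        # Ties are broken alphabetically because max keeps the first maximum.
        name = max(sorted(remaining), key=lambda n: len(remaining[n] - covered))
        gain = remaining.pop(name) - covered
        if not gain:
            break  # no remaining scenario covers anything new
        chosen.append(name)
        covered |= gain
    return chosen, len(covered) / len(all_blocks)
```

The result makes the limits of a scenario set visible: blocks that no scenario reaches stay uncovered regardless of how the scenarios are ordered.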

Among the identified Android testing tools, 45 of them are capable of generating test cases. Each of these implements its own generation algorithms. Thus, 24 different test strategies were identified in the 45 tools that generate test cases. The most commonly used strategies are presented in Table 6.

Table 6 Types of generation strategy

Model-based testing (MBT) is an approach to generate test cases using a model of the application under test. In this strategy, (formal) models are used to describe the behavior of the application and, thus, generate test cases. Among the identified tools that generate test cases, we observed that 20 of them use the MBT strategy to generate their test cases. The application models mostly describe the behavior of the application under test by identifying GUI elements and changing activity based on these elements.
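As a minimal sketch of model-based test generation, assume the model is a finite state machine whose states are activities and whose transitions are GUI events. The model contents, the names, and the bounded enumeration below are hypothetical and chosen only for illustration; the surveyed tools use a variety of models and traversal criteria.

```python
# Hypothetical behavioral model: activity -> {GUI event: next activity}.
MODEL = {
    "Login": {"submit": "Home"},
    "Home": {"open_settings": "Settings", "logout": "Login"},
    "Settings": {"back": "Home"},
}


def generate_tests(model, start, max_len):
    """Enumerate every event sequence of length 1..max_len allowed by the model."""
    tests = []

    def walk(state, path):
        if path:
            tests.append(list(path))  # each non-empty prefix is a test case
        if len(path) == max_len:
            return
        for event, target in sorted(model[state].items()):
            path.append(event)
            walk(target, path)
            path.pop()

    walk(start, [])
    return tests
```

Each generated sequence is a test case that can be executed against the real application, with the model also predicting the activity expected after each event.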

The second most commonly used generation strategy is GUI ripping; we found seven tools that implement this strategy. GUI ripping is a strategy that dynamically traverses an app’s GUI and, based on its GUI elements, creates a state-machine model or a tree graph of the app.
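The following minimal sketch illustrates the idea of GUI ripping on a simulated application. `FakeGuiApp` and its screens are hypothetical; the ripper only uses the `screen`, `widgets()`, and `tap()` members, in the same way a real ripper can only observe and exercise the running GUI. The sketch assumes deterministic navigation and that every screen can be reached again by restarting the app and repeating the taps.

```python
class FakeGuiApp:
    """Hypothetical app whose screens and widgets are hidden from the ripper."""

    _SCREENS = {
        "Main": {"btn_list": "List", "btn_about": "About"},
        "List": {"item": "Detail", "back": "Main"},
        "Detail": {"back": "List"},
        "About": {"back": "Main"},
    }

    def __init__(self):
        self.screen = "Main"

    def widgets(self):
        return sorted(self._SCREENS[self.screen])

    def tap(self, widget):
        self.screen = self._SCREENS[self.screen][widget]


def launch_and_replay(app_factory, path):
    """Restart the app and repeat the taps that lead to a given screen."""
    app = app_factory()
    for widget in path:
        app.tap(widget)
    return app


def rip(app_factory):
    """Build a state-machine model by tapping every widget on every reachable screen."""
    model = {}
    worklist = [(app_factory().screen, [])]  # (screen, taps that reach it from launch)
    while worklist:
        screen, path = worklist.pop()
        if screen in model:
            continue  # already explored
        model[screen] = {}
        for widget in launch_and_replay(app_factory, path).widgets():
            app = launch_and_replay(app_factory, path)
            app.tap(widget)
            model[screen][widget] = app.screen
            worklist.append((app.screen, path + [widget]))
    return model
```

The resulting dictionary has the same shape as a hand-written behavioral model, so it can be used as input to a model-based test generator.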

The third most used strategy among the tools found is the random strategy, which is implemented by six tools. Although it is a less ingenious strategy than the others, some studies point out that it is very efficient at finding crashes [6].
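A random strategy can be sketched in a few lines. The event names below are hypothetical placeholders, and the fixed seed is an assumption added so that a crashing sequence can be regenerated; this is not the implementation of any of the six tools.

```python
import random

# Hypothetical event kinds a random tester might fire at the application.
EVENTS = ["tap", "swipe", "back", "rotate", "text_input"]


def random_test_case(length, seed):
    """Return a pseudo-random event sequence; the same seed always
    yields the same sequence, so a failing run can be reproduced."""
    rng = random.Random(seed)
    return [rng.choice(EVENTS) for _ in range(length)]


print(random_test_case(5, seed=7))
```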

Regarding the remaining data acquired from the questions presented at the beginning of this section about the characteristics of the tools (use of sensor data, test case generation, test case execution, download availability, and testing approach), Table 7 summarizes how many tools have each of them.

Table 7 Number of tools that presents a given characteristic

Among the studies found by the systematic mapping, Bernardo et al. [45] present an investigation on 19 open-source mobile applications for Android in order to identify how automated tests are employed in practice. They concluded that 47% of these applications have some kind of automated tests, and they observed that the most used testing tools were JUnit, Android.Test, Hamcrest, Robolectric, EasyMock, Robotium, and Fest. Finally, Bernardo et al. observed that the most important challenges in testing Android applications such as rich GUIs, limited resources, and sensors have not been properly handled in the automated tests of the analyzed 19 open-source applications.

Linares-Vásquez et al. [106] conducted a survey on 102 Android mobile developers about their practices when performing testing. One of the questions to be answered by the survey was “What tools do you use for automated testing?”. As a result, Linares-Vásquez et al. concluded that “The most used tool is JUnit (45 participants), followed by Roboelectic with 16 answers, and Robotium with 11 answers. 28 participants explicitly mentioned they have not used any automated tool for testing mobile apps. 39 out of 55 tools were mentioned only by one participant each, which suggests that mobile developers do not use a well-established set of tools for automated testing.”

Villanes et al. [107] performed a study using Stack Overflow to analyze and cluster the main topics of interest on Android testing. One of their results pointed out that, recently, developers have shown increased interest in the Appium, Espresso, Monkey, and Robotium tools.

Bernardo et al. analyzed a small number of applications when compared with the data sets of Linares-Vásquez et al. and Villanes et al. Thus, based on the latter two studies, we can say with greater certainty that Robotium, JUnit, Robolectric, Appium, Espresso, and Monkey are the most used tools for testing Android applications.

In addition, we observed which of the studies identified in the SMS are the most cited in ACM, IEEE, and Google Scholar. Table 8 presents the studies and their respective tools that are most cited among the identified studies and tools.

Table 8 Most cited study and tools

From the 68 selected studies, Griebe and Gruhn [44], Vieira et al. [37], and Amalfitano et al. [42] explicitly focus on testing of context-aware applications. The “Custom-built version of the Calabash-Android” section, the “Context simulator” section, and the “Extended AndroidRipper” section discuss the Android context-aware application testing tools investigated in these three studies. In addition, these three studies cite two other tools that also explicitly test context-aware applications: ContextDrive and TestAWARE. These tools are discussed in the “ContextDrive” section and the “TestAWARE” section, respectively.

Griebe and Gruhn [44] propose a model-based approach to improve the testing of context-aware mobile applications. Their approach is based on a four-tier process system as follows:

To assess the proposed approach, Griebe and Gruhn have extended the Calabash tool to implement it. Calabash is a test automation framework that supports the creation and execution of automated acceptance tests for Android and iOS apps without the necessity of coding skills [108]. It works by enabling automatic UI interactions within an application such as pressing buttons, inputting text, validating responses, and so on.

Calabash is a completely free and open-source tool. It uses the Gherkin pattern, a writing pattern for executable specifications that standardizes how execution criteria are written through the keywords Given, When, and Then. Accordingly, Calabash expresses the test cases as Cucumber features [109].

A limitation of the Griebe and Gruhn approach is the need for creating a model that describes possible AUT activities. Modeling is not a widely understood activity between testers and developers, and a poorly designed model can lead to false positives or false negatives in test verdicts.

Vieira et al. [37] argue that testing context-aware applications in the lab is difficult because of the number of different scenarios and situations that a user might be involved with. Hence, the Android platform provides simulation tools to support the physical sensor test. However, it is not enough to test context-aware applications only at the physical sensors level. Considering that, Vieira et al. have developed a simulator that simulates a real laboratory environment. The simulator provides support for modeling and simulation of context in different levels: physical and logical context, situations, and scenarios.

The simulation is separated into two main components: the desktop application and the mobile component:

The modeling in the context simulator is made in four different levels:

The context simulator supports a large variety of context sources, 22 contexts divided into 6 categories supporting 41 context sources [37].

A limitation of the context simulator is that the tester must model each test case. That is, if the tester wishes to test an AUT under possible adverse situations such as weak GPS signal, receiving a phone call and changing Internet connection conditions, then the tester should model all scenarios that he/she wishes to test.

Amalfitano et al. [42] analyzed bug reports from open-source applications available at GitHub and Google Code. From the results, they defined some usage scenarios, which they call event-patterns, that are more likely to expose failures in context-aware applications. Some examples of event-patterns are as follows:

Amalfitano et al. carried out an experiment with the objective of examining if, in fact, the event-patterns represent scenarios of a greater chance of context-aware application failures. Thus, they extended the tool AndroidRipper [43]. The extended AndroidRipper is able to fire context events such as location changes, enabling/disabling of GPS, changes in orientation, acceleration changes, reception of text messages and phone calls, and shooting of photos with the camera. Both versions of AndroidRipper explore the application under test looking for crashes measuring the obtained code coverage and automatically generating Android JUnit test cases that reproduce the explored executions.

The extended AndroidRipper tool generates test cases watching for events that cause a reaction from the application. Once the events that cause a reaction are detected, the technique of Amalfitano et al. generates test cases based on event-patterns identified by the authors. Therefore, the tool does not focus on testing high-level context variations.

By studying the papers of Griebe and Gruhn [44], Vieira et al. [37], and Amalfitano et al. [42], we found two papers related to testing context-aware Android applications: Mirza and Khan [110] and Luo et al. [111]. The corresponding tools are presented in the sequel.

Mirza and Khan [110] argue that testing context-aware applications is a difficult task due to challenges such as developing test adequacy and coverage criteria, context adaptation, context data generation, designing context-aware test cases, developing test oracles, and devising new testing techniques to test context-aware applications. In response to these challenges, they argue that context adaptation cannot be modeled using a standard notation such as the UML activity diagram. Therefore, Mirza and Khan extended the UML activity diagram, by adding a context-aware activity node, for behavior modeling of context-aware applications.

Mirza and Khan proposed a test automation framework named ContextDrive. Its proposed model consists of six phases.

Mirza and Khan’s technique is similar to the one implemented in the tool of the “Custom-built version of the Calabash-Android” section. Therefore, it carries the same restriction: the tester must have experience in UML activity diagram modeling. In addition, the tool uses static data to execute test cases. Therefore, testing situations that use a lot of sensor data becomes infeasible (e.g., testing a GPS navigator application).

One of the difficulties in testing context-aware applications is the heterogeneity of context information and the difficulty and/or high cost of reproducing contextual settings. As an example, Luo et al. [111] present a real-time fall detection application; the application detects when the user drops the mobile phone under different circumstances such as falling out of the pocket or falling out of the hand. The application is programmed to send an email to a caregiver every time a fall event is detected by the phone. For this application, testing new versions of the application is very costly. Thus, Luo et al. [111] introduce the TestAWARE tool.

TestAWARE is able to download, replay, and emulate contextual data on either physical devices or emulators. In other words, the tool is able to obtain and replay “context” and thus provide a reliable and repeatable setting for testing context-aware applications.

Luo et al. compare their tool with other available tools. In summary, they say TestAWARE aims at a wide variety of mobile context-aware applications and testing scenarios. This is possible because TestAWARE incorporates heterogeneous data (i.e., sensory data, events, and audio), multiple data sources (i.e., online, local, and manipulated data), black-box and white-box testing, functional/non-functional property examination, and both device and emulator environments.

A limitation of the tool is that it is not possible to create test cases without executing each test case at least once on a real device in the real scenario. Because it is a record and replay tool, the test cases must first be recorded, which requires running the AUT on a real device under each of the conditions to be tested.

Among the found studies, Moran et al. [18, 52], Qin et al. [75], Yongjian and Iulian [71], Gomez et al. [81], and Farto et al. [83] present tools that were not intended for context-aware application testing. However, they support the testing of context-aware features.

Moran et al. [18, 52] argue that one of the most difficult and important maintenance tasks is the creation and resolution of bug reports. For this reason, they introduced the CrashScope tool. The tool is capable of generating augmented crash reports with screenshots, crash reproduction steps, and captured exception stack trace, along with a script to reproduce the crash on a target device. In order to do so, CrashScope explores the application under test by performing input generation by static and dynamic analyses which include automatic text generation capabilities based on context information such as device orientation, wireless interfaces, and sensors data.

The CrashScope GUI ripping engine systematically executes the application under test using various strategies. At each step, the tool first checks for contextual features that should be tested according to the exploration strategy: the GUI ripping engine checks whether the current activity is suitable for exercising a particular contextual feature in adverse conditions. The testing of contextual features in adverse conditions consists of setting the sensors (GPS, accelerometer, etc.) to unexpected values that would not typically be possible under normal conditions. For instance, to test the GPS in an adverse contextual condition, CrashScope sets the value to coordinates that do not represent physical GPS coordinates. In other words, for each running Activity, CrashScope checks which contextual features are possible, checks whether contextual features should be enabled or disabled, and sets feature values. CrashScope attempts to produce crashes by disabling and enabling sensors as well as by sending unexpected (e.g., highly unfeasible) values. Because of that, there are scenarios that cannot be tested in CrashScope (e.g., testing whether the application crashes if the user leaves a pre-established route).
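The notion of "coordinates that do not represent physical GPS coordinates" can be made concrete with a small sketch. Valid latitudes lie in [-90, 90] and valid longitudes in [-180, 180]; the specific adverse values below are hypothetical and are not taken from CrashScope.

```python
def is_physical_gps(lat, lon):
    """True when the pair lies inside the valid latitude/longitude ranges."""
    return -90.0 <= lat <= 90.0 and -180.0 <= lon <= 180.0


def adverse_gps_values():
    """Hypothetical adverse inputs: every pair violates at least one range."""
    return [
        (91.0, 0.0),     # latitude just above the upper bound
        (-91.0, 0.0),    # latitude just below the lower bound
        (0.0, 181.0),    # longitude just above the upper bound
        (0.0, -181.0),   # longitude just below the lower bound
        (1e6, -1e6),     # both values far outside any plausible range
    ]


for lat, lon in adverse_gps_values():
    print(lat, lon, is_physical_gps(lat, lon))
```

Such values stress input validation, but, as noted above, they cannot express a realistic scenario such as a user leaving a planned route, which requires a sequence of valid coordinates.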

According to Qin et al. [75], MobiPlay is the first record and replay tool that is able to capture all possible inputs at the application layer. That is, MobiPlay is the first tool capable of recording and replaying, at the application layer, all the interactions between the Android app and both the user and the environment in which the mobile phone is located.

While the user is executing the app, MobiPlay records every input the application receives and the time interval between every two consecutive inputs. After that, the tool can re-execute the application under test with the same inputs. The expected result is that the application behaves exactly as it did in the original execution. MobiPlay is composed of two components: a mobile phone and a remote server. Initially, the mobile phone sends all sensor data and user interactions to the remote server, which stores them. From there, the remote server can reproduce the executed scenario by sending the saved data back to the mobile phone.

The application under test is called the target app, and it is installed on the remote server, not on the mobile phone. The communication between the mobile phone and the target app is done through the client app. The client app is installed on the mobile phone; it is a typical Android app that does not require root privilege and is dedicated to intercepting all the input data for the target app. The basic idea of MobiPlay is that the target app actually runs on the server, while the user interacts with the client app on the mobile phone in such a way that the user is not explicitly aware that he is, in effect, using a thin client. The client app shows the GUI of the target app in real time on the mobile phone, as if the target app were actually running on the mobile phone. While the user interacts with the target app through the client app, the server records all the touch screen gestures (pinch, swipe, click, long click, multi-touch, and so on) and the other inputs provided by sensors such as gyroscope, compass, and GPS, in a way that is transparent to the user. Once the inputs are recorded, MobiPlay can re-execute the target app with the same inputs and the same time intervals, simulating the interaction between the user and the target app.
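The core record and replay idea described above, storing each input together with the interval since the previous one and later delivering the inputs with the same intervals, can be sketched as follows. This is a simplified illustration under our own assumptions, not MobiPlay's implementation: the `Recorder` class, the `replay` function, and the injected clock are hypothetical names, and the client/server split is omitted.

```python
import time


class Recorder:
    """Stores each input with the delay since the previous input."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._last = None
        self.trace = []  # list of (delay_seconds, source, payload)

    def record(self, source, payload):
        now = self._clock()
        delay = 0.0 if self._last is None else now - self._last
        self._last = now
        self.trace.append((delay, source, payload))


def replay(trace, deliver, sleep=time.sleep):
    """Re-deliver every recorded input after waiting its recorded delay."""
    for delay, source, payload in trace:
        sleep(delay)
        deliver(source, payload)


# Usage with a fake clock so the example runs instantly.
ticks = iter([0.0, 0.5, 2.0])
rec = Recorder(clock=lambda: next(ticks))
rec.record("touch", (120, 300))
rec.record("gps", (-12.97, -38.50))
rec.record("touch", (40, 80))

delivered, waits = [], []
replay(rec.trace, lambda s, p: delivered.append((s, p)), sleep=waits.append)
print(waits)
print(delivered)
```

Injecting the clock and the sleep function keeps the sketch testable; a real tool would deliver the events to the app's input channels instead of a list.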

Just like the TestAWARE tool (the “TestAWARE” section), MobiPlay first needs to record the test cases that the tester wants to check.

VersAtile yet Lightweight rEcord and Replay for Android (VALERA) is a tool capable of recording and replaying Android apps by focusing on sensors and event streams, rather than on system calls or the instruction stream. Its approach promises to be effective yet lightweight. VALERA is able to record and replay inputs from the network, GPS, camera, microphone, touchscreen, accelerometer, compass, and other apps via IPC. The main concern of the authors is to be able to record and replay Android applications with minimal overhead. They claim to keep the performance overhead low, on average 1.01% for record and 1.02% for replay. The timing overhead is very important when replaying an application: if the timing of the inputs during replay deviates from the original timing, the application may behave differently than it did during recording. For this reason, VALERA is designed to minimize timing overhead. In order to evaluate VALERA, the tool was exercised against 50 applications with different sensors. The evaluation consisted of exercising the relevant sensors of each application, e.g., scanning a barcode for the Barcode Scanner, Amazon Mobile, and Walmart apps; playing a song externally so that the apps Shazam, Tune Wiki, or SoundCloud would attempt to recognize it; driving a car to record a navigation route for Waze, GPSNavig.&Maps, and NavFreeUSA; and so on.

VALERA has the same limitations as TestAWARE and MobiPlay.

RERAN is a black-box record and replay tool capable of capturing the low-level event stream on the phone, which includes both GUI events and sensor events, and replaying it with microsecond accuracy. It is an earlier record and replay system by the authors of VALERA and is similar to VALERA, but with some limitations. RERAN is unable to replay sensors whose events are made available to applications through system services rather than through the low-level event interface (e.g., camera and GPS). When validating the tool, the authors report that RERAN was able to record and replay 86 out of the Top-100 Android apps on Google Play and to reproduce bugs in popular apps, e.g., Firefox, Facebook, and Quickoffice.

RERAN has the same limitations as TestAWARE, MobiPlay, and VALERA. Another limitation of RERAN is that it does not support testing the GPS sensor.

Farto et al. [83] proposed an MBT approach for modeling mobile apps in which test models are reused to reduce the effort on concretization and verify other characteristics such as device-specific events, unpredictable users’ interaction, telephony events for GSM/text messages, and sensors and hardware events.

The approach is based on an MBT process with Event Sequence Graphs (ESGs) models representing the features of a mobile app under test. Specifically, the models are focused on system testing, mainly user’s and GUI’s events. Farto et al. implemented the proposed testing approach in a tool called MBTS4MA (Model-Based Test Suite For Mobile Apps).

MBTS4MA provides a GUI for modeling. Thus, it supports the design of ESG models integrated with the mobile app data like labels, activity names, and general configurations. Although the models are focused on system testing, mainly user’s and GUI’s events, it is also possible to test sensors and hardware events. The supported sensor events are change acceleration data, change GPS data, disable Bluetooth, enable Bluetooth, and update coordinates. However, the authors argue that it is possible to extend the stereotypes of the tool to support more sensor events.
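To illustrate how a sensor event can sit next to GUI events in such a model, the sketch below derives event sequences from a tiny Event Sequence Graph. It is a hypothetical example, not MBTS4MA's generation algorithm: the graph, the event names, and the use of "[" and "]" as entry and exit pseudo-nodes are assumptions made for this illustration.

```python
# Hypothetical ESG: each event maps to the events that may follow it.
# "[" and "]" are assumed entry and exit pseudo-nodes.
ESG = {
    "[": ["open_map"],
    "open_map": ["change_gps_data", "close_map"],
    "change_gps_data": ["close_map"],
    "close_map": ["]"],
    "]": [],
}


def event_sequences(esg, node="[", path=()):
    """Return every cycle-free event sequence from entry to exit."""
    if node == "]":
        return [list(path)]
    sequences = []
    for nxt in esg[node]:
        if nxt in path:  # skip cycles to keep the sketch finite
            continue
        step = path if nxt == "]" else path + (nxt,)
        sequences.extend(event_sequences(esg, nxt, step))
    return sequences


for sequence in event_sequences(ESG):
    print(sequence)
```

Each printed sequence corresponds to one abstract test case; one of them exercises the GPS change between opening and closing the map.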

Just like the custom-built version of the Calabash-Android tool (the “Custom-built version of the Calabash-Android” section), MBTS4MA needs the creation of a model that represents the features of a mobile app under test.

In order to answer this research question, we have observed the publications of the authors of the Android context-aware testing studies, such as Griebe and Gruhn [44], Vieira et al. [37], and Amalfitano et al. [42].

The authors of Griebe and Gruhn [44] are Tobias Griebe and Volker Gruhn. Both authors have written only two more publications that refer to context-aware applications:

The authors of Vieira et al. [37] are Vaninha Vieira, Konstantin Holl, and Michael Hassel. Vaninha Vieira is a professor of Computer Science at Federal University of Bahia, Brazil. Her research interests include context-aware computing, mobile and ubiquitous computing, collaborative systems and crowdsourcing, gamification and user engagement, and smart cities (crisis and emergency management, intelligent transportation systems). Among her publications, we can note an interest in mobile applications with regard to context modeling, quality assurance, context-sensitive systems development, context management, and so on. Konstantin Holl has published papers related to quality assurance, but nothing can be said about the research interests of Michael Hassel due to the lack of publications.

The authors of Amalfitano et al. [42] are Domenico Amalfitano, Anna Rita Fasolino, Porfirio Tramontana, and Nicola Amatucci. Domenico Amalfitano, Anna Rita Fasolino, and Porfirio Tramontana are not only professors at the same institution (University of Naples Federico II), but most of their articles were also written together. Their publications concern software engineering, testing, and reverse engineering. Many of the testing publications are about Android app testing. In particular, they have a lot of experience in the GUI ripping technique. Most of Nicola Amatucci’s publications are about testing Android applications, and most of them were written together with Domenico Amalfitano, Anna Rita Fasolino, or Porfirio Tramontana.

All of these authors have significant publications regarding mobile application testing. Besides them, as mentioned in the “Results” section, we can refer to Iulian Neamtiu, Tanzirul Azim, and Yongjian Hu who have great contributions in the research area. However, among the studied authors, Vaninha Vieira is the author who most directly contributed to the research on context-aware applications.

In this paper, we identify five tools for testing of context-aware Android applications and five tools that support testing of context-aware applications, totaling 10 tools.

Testing context-aware applications poses challenges such as the wide variety of context data types and context variation. The most commonly used context data type is location, acquired by the GPS sensor. However, there are many other types of data, such as temperature, orientation, brightness, time, and date.

Context-aware applications use context data provided by sensors to provide services or information. Waze, for example, uses the GPS, the time, and information provided by the cloud to inform the driver about obstacles along the way to the final destination. However, many context-aware applications use combinations of sensor information to infer contexts and, from these inferred contexts, provide services or information. Vieira et al. [37] call low-level context the context information that is directly collected from sensors or from other sources of information such as a database or the cloud, and high-level context the contexts that result from combining low-level contexts.
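The distinction between low-level and high-level context can be illustrated with a small rule-based sketch. The field names, the thresholds, and the context labels are hypothetical values chosen for the example; they are not taken from Vieira et al. [37] or from any real application.

```python
from dataclasses import dataclass


@dataclass
class LowLevelContext:
    """Values read directly from sensors (hypothetical fields)."""
    heart_rate_bpm: int
    speed_kmh: float
    steps_per_min: int


def infer_high_level(ctx):
    """Combine low-level readings into one high-level context label.
    All thresholds are illustrative assumptions."""
    if ctx.steps_per_min >= 60 and ctx.speed_kmh < 7:
        return "walking"
    if ctx.steps_per_min >= 60:
        return "running"
    if ctx.speed_kmh >= 20:
        return "in a vehicle"
    return "at rest"


print(infer_high_level(LowLevelContext(95, 5.0, 100)))
print(infer_high_level(LowLevelContext(70, 60.0, 0)))
```

Note that the second reading has a high speed but no steps: a single sensor value would be misleading, which is why the inference combines several low-level contexts.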

Many context-aware applications use high-level context to provide their services or information. Samsung has developed an application called Samsung Health [114] that tracks user’s physical activities. Combined with its Smart Watch, the application monitors heartbeat, movement, steps, geographical location, time of day, and other information. From this information, the application infers contexts in which the user is and then concludes whether the user is practicing physical activity or whether he is at rest. Taking the example of the Samsung Health application, Table 9 exposes some examples of high-level contexts from the composition of low-level contexts.

Table 9 High-level context examples

As we have said, another challenge in testing context-aware applications is the constant variation of context. The context changes asynchronously, and the application must respond correctly and effectively to context variations. Taking Samsung Health as an example, the application must realize when the user changes activities throughout the day and provide all the information and services correctly. Thus, when the user is sleeping and gets up, the application should stop counting the duration and the quality of sleep. If the user starts walking, the application must count time, distance, and calories burned. When the user stops walking, gets in the car, and drives home, the application should stop counting the walking information and understand that the user is at rest, even though he is moving.

Considering the difficulties of testing context-aware applications, the 10 tools identified in this work were analyzed and compared according to 11 questions raised:

Table 10 presents the result of the analysis of the 10 tools by looking at the 11 questions.

Table 10 Tools comparison

The first observation we had of the tools was on the type of context data they support. With the exception of RERAN, they all support GPS. It was natural to expect this result since location is the most commonly used data type by context-aware applications. We can also see that Context Simulator and ContextDrive are the only tools that support all low-level context data types. In addition, these are the only tools that support high-level context data.

Mirza and Khan [110] propose an extension of the UML activity diagram for modeling high-level context variation. Thus, their ContextDrive tool can test variations from one context to another. However, the authors use static data to execute the test cases. Therefore, the tool is unable to generate new test cases automatically.

The Context Simulator tool provides a graphical interface for the tester that enables the creation of application usage scenarios. Thus, it is possible for the tester to simulate high-level contexts. To do this, the tester explicitly describes each test case he/she wants to execute as well as which sensor values are going to be used in the test.

Context variations occur asynchronously, and some of them occur in a totally unexpected way. While a context-aware application is in use, a phone call can be received and, during the call, the user context may change. As another example, the GPS signal may drop and then return after a few moments.
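One simple way to exercise such asynchronous variations is to inject a context event pattern at every possible point of an existing test sequence. The sketch below illustrates this idea under our own assumptions; the event names are hypothetical and the function is not part of any of the analyzed tools.

```python
def inject_pattern(base_events, pattern):
    """Return one variant per insertion point, with the context
    pattern injected at that point of the base test sequence."""
    variants = []
    for i in range(len(base_events) + 1):
        variants.append(base_events[:i] + pattern + base_events[i:])
    return variants


# Hypothetical base test case and a "GPS drops, then returns" pattern.
base = ["open_app", "start_route", "stop_route"]
gps_drop = ["gps_off", "gps_on"]

variants = inject_pattern(base, gps_drop)
for variant in variants:
    print(variant)
```

A base sequence of n events yields n + 1 variants per pattern, so the number of test cases grows linearly with the sequence length and with the number of patterns considered.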

Although three tools support context variation testing, none of them is able to automatically generate test cases that use high-level contexts and test variations of high-level contexts, taking into account unexpected scenarios such as the event-patterns described by Amalfitano et al. [42], presented in the “Extended AndroidRipper” section.

In this work, a systematic mapping was carried out in order to identify and investigate tools that allow the automation of testing Android context-aware applications. A total of 6648 studies were obtained, 68 of which were considered as relevant publications when taking into account our research questions. These works were first analyzed according to the conference publication, year, country, and authors. The main result of this first analysis was the identification of research groups in the area of interest.

Another important contribution of this work was the identification of 80 Android application testing tools. From these tools, we identified which techniques they implement, which generate test cases, which execute test cases, which are the test methods, which ones are available for download, and which ones are most commonly used. We noticed that 40% of Android testing tools implement GUI testing. We also note that, among the tools that generate test cases, 42% use MBT as the generation strategy approach.

The main contribution and objective of this work was the identification of context-aware Android application testing tools and the analysis of their limitations. We have identified 10 tools that support the test of context-aware applications. Five of these tools have been developed explicitly for context-aware applications, and five have been developed for general applications, but they also support context-aware features testing.

In our work, we have not done any experimental studies to evaluate other features of the tools such as test case generation time and number of revealed failures. This would require access to all tools, but 3 of the 10 tools are not available for download. Therefore, as future work, we will continue trying to gain access to the tools we could not download to test the same applications using the 10 found tools. This execution may reveal behaviors or characteristics that the authors of the tools have not expected, as well as giving more richness to the evaluation conducted in our work. We will also investigate techniques for generating test cases for context-aware applications that use high-level context with support for asynchronous context variation. From the identified techniques, we will define models and algorithms that allow automatic generation of test cases with asynchronous context variation, possibly using scenarios that are more likely to fail.